Back when I was studying music, I regularly used Tom Erbe’s SoundHack freeware to perform a variety of audio mangling tasks, chiefly convolution. The output was often unpredictable and helped convey something about the input audio that’s hard to elicit any other way. It was also much more accessible than other software on the market at the time, a real feat in the days before that kind of processing could run on chips small enough to fit inside Eurorack modules.

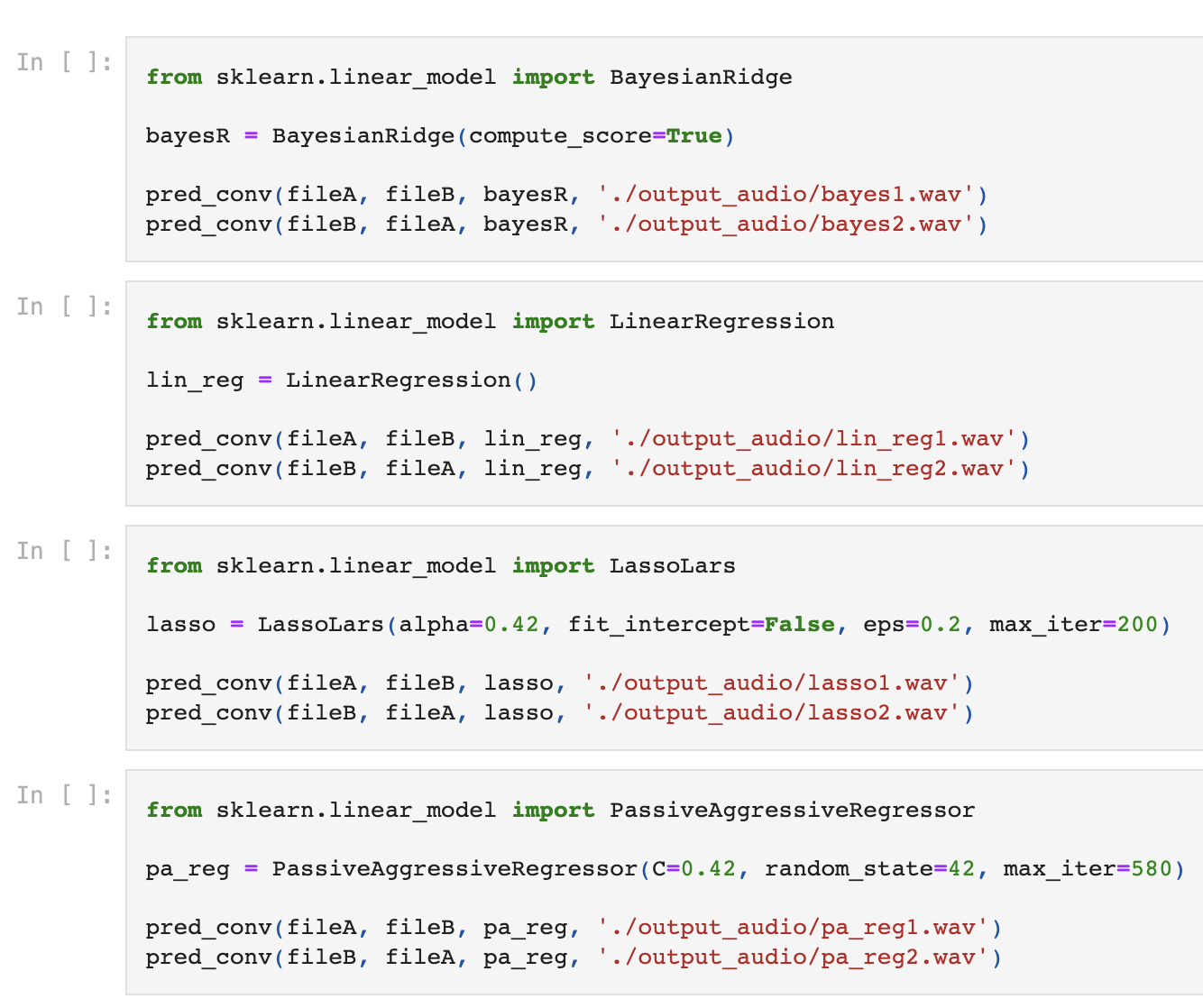

Around 2018, Apple made changes to its kernel that rendered applications like SoundHack inoperable. I was interested in exploring machine learning, particularly for time-series data, and audio convolution seemed like an interesting way to put it to the test, so I gave it a try.

The models and implementations I used were extremely rudimentary. To train each model, I first split an input audio file into frequency bands using an FFT, then associated each band’s magnitude and phase, along with its complex real and imaginary values, with the actual voltage level (raw magnitude) of the original audio file. Then, given a second audio file, I gave the machine the raw magnitude at regular time intervals and had it predict the real and imaginary output of a fictitious FFT, which I then ran through an inverse FFT (iFFT) to generate a final list of raw magnitudes: a new audio file.
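The train-then-resynthesize loop described above can be sketched in Python with NumPy. This is a minimal sketch under several assumptions that are not from the original: a mono float signal, non-overlapping 256-sample frames, and a simple nearest-neighbour model (`NearestFrameModel`, a hypothetical name) standing in for whatever machines were actually used. Each training example pairs one frame of raw samples with the real and imaginary parts of that frame’s FFT; at synthesis time the model predicts those parts for frames of a second file, and an inverse FFT turns them back into samples.

```python
import numpy as np

FRAME = 256  # assumed frame size in samples (not specified in the original)


def to_frames(signal: np.ndarray, size: int = FRAME) -> np.ndarray:
    """Split a mono signal into non-overlapping frames, dropping any leftover samples."""
    n = len(signal) // size
    return signal[: n * size].reshape(n, size)


def fft_targets(frames: np.ndarray) -> np.ndarray:
    """Real and imaginary rFFT coefficients of each frame, side by side."""
    spec = np.fft.rfft(frames, axis=1)
    return np.hstack([spec.real, spec.imag])


class NearestFrameModel:
    """Hypothetical stand-in model: averages the FFT targets of the k closest training frames."""

    def __init__(self, k: int = 1):
        self.k = k

    def fit(self, x: np.ndarray, y: np.ndarray) -> "NearestFrameModel":
        self.x, self.y = x, y
        return self

    def predict(self, x: np.ndarray) -> np.ndarray:
        # Squared Euclidean distance between every query frame and every training frame.
        d = (
            (x**2).sum(axis=1)[:, None]
            - 2 * x @ self.x.T
            + (self.x**2).sum(axis=1)[None, :]
        )
        nearest = np.argsort(d, axis=1)[:, : self.k]
        return self.y[nearest].mean(axis=1)


def resynthesize(model: NearestFrameModel, signal: np.ndarray, size: int = FRAME) -> np.ndarray:
    """Predict a 'fictitious' FFT for each frame of signal, then invert it back to samples."""
    pred = model.predict(to_frames(signal, size))
    bins = size // 2 + 1  # number of rFFT bins per frame
    spec = pred[:, :bins] + 1j * pred[:, bins:]
    return np.fft.irfft(spec, n=size, axis=1).ravel()


# Usage with synthetic stand-ins for the two audio files.
sr = 8000
t = np.arange(sr) / sr
source = np.sin(2 * np.pi * 220 * t) * np.hanning(sr)  # "training" file
target = np.random.default_rng(0).standard_normal(sr) * 0.1  # file to transform

train_frames = to_frames(source)
model = NearestFrameModel(k=3).fit(train_frames, fft_targets(train_frames))
output = resynthesize(model, target)
```

With `k=1` and the training file itself as input, this round-trips back to essentially the original samples, which makes a handy sanity check. A plain least-squares linear model would be a poor fit for this idea: given enough varied training frames it simply recovers the DFT matrix, so it hands back the second file essentially unchanged. Non-overlapping, unwindowed frames will also click at frame boundaries; an overlap-add scheme would smooth that out.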

Different machines produced very different results. It may not be the most useful tool, given that machine learning tools are much more powerful now and Tom Erbe has SoundHack running on Macs again, but it’s an interesting approach to creating new sounds and textures nonetheless.